Stealing Machine Learning Models via Prediction APIs (PDF)

This paper is included in the Proceedings of the 25th USENIX Security Symposium, August 10–12, 2016, Austin, TX. ISBN 978-1-931971-32-4. Open access to the Proceedings of the …

arXiv Paper Spotlight: Automated Inference on Criminality Using Face Images. Tags: Academics, arXiv, Classification, Crime, Face Recognition, Image Recognition, Machine Learning. This recent paper addresses the use of still facial images in an attempt to differentiate criminals from non-criminals, doing so with the help of 4 different classifiers

Stealing Machine Learning Models via Prediction APIs Wired magazine just published an article with the interesting title How to Steal an AI, where the author explores the… blog.bigml.com Attacking Machine Learning with Adversarial Examples

stock return prediction, we perform a comparative analysis of methods in the machine learning repertoire, including generalized linear models, dimension reduction, boosted regression trees, random forests, and neural networks.

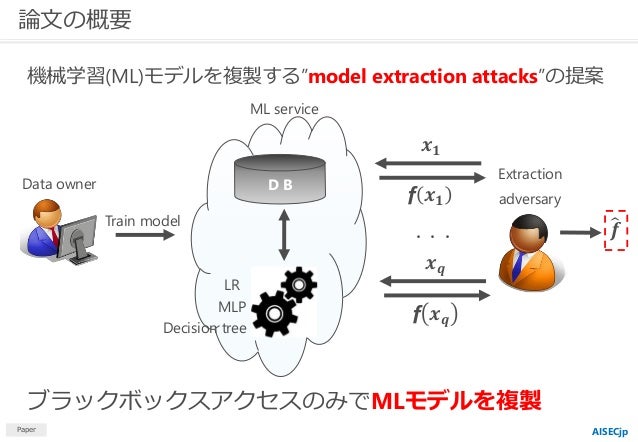

The central premise of the researchers is that, despite their confidentiality, machine learning models which have public-facing APIs are vulnerable to model extraction attacks, which attempt to “steal the ingredients” and duplicate functionality.
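As a rough illustration of what "duplicating functionality" can mean, the sketch below shows an equation-solving extraction against a logistic regression that returns confidence scores, one of the attack families discussed in the Tramèr et al. paper. It assumes the attacker already knows the model class and the number of features. `SECRET_W`, `SECRET_B`, and `query_api` are hypothetical stand-ins for a remote service, not a real API.

```python
import math
import random

# Hypothetical "victim": a logistic regression whose parameters are secret.
SECRET_W = [1.5, -2.0, 0.5]
SECRET_B = 0.25


def query_api(x):
    """Stand-in for a prediction API that returns a confidence score."""
    z = sum(w * xi for w, xi in zip(SECRET_W, x)) + SECRET_B
    return 1.0 / (1.0 + math.exp(-z))


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def extract(dim, rng):
    """Recover (weights, bias) from dim + 1 confidence queries."""
    queries = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim + 1)]
    # logit(p) = w . x + b is linear in the unknowns (w, b),
    # so each query contributes one linear equation.
    rows = [q + [1.0] for q in queries]
    rhs = []
    for q in queries:
        p = query_api(q)
        rhs.append(math.log(p / (1.0 - p)))
    solution = solve_linear(rows, rhs)
    return solution[:dim], solution[dim]


stolen_w, stolen_b = extract(3, random.Random(0))
```

With three features, four queries are enough here because the service leaks the full confidence value. An API that returns only class labels would not allow this shortcut.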

PDF; Abstract. Machine Learning (ML) models are increasingly deployed in the wild to perform a wide range of tasks. In this work, we ask to what extent can an adversary steal functionality of such “victim” models based solely on blackbox interactions: image in, predictions out. In contrast to prior work, we present an adversary lacking knowledge of train/test data used by the model, its

Machine Learning From Streaming Data: Two Problems, Two Solutions, Two Concerns, and Two Lessons by charleslparker on March 12, 2013 There’s a lot of hype these days around predictive analytics, and maybe even more hype around the topics of “real-time predictive analytics” or “predictive analytics on streaming data”.

M. Ribeiro, K. Grolinger, M.A.M. Capretz, MLaaS: Machine Learning as a Service, International Conference on Machine Learning and Applications, 2015. © 2015 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new

Stealing Machine Learning Models via Prediction APIs (arxiv.org). The title probably already explains the idea: this paper collects techniques to ‘steal’ models that are exposed through a query interface without actually knowing anything about the model's architecture.

Python implementation of extraction attacks against Machine Learning models, as described in the following paper: Stealing Machine Learning Models via Prediction APIs Florian Tramèr, Fan Zhang, Ari Juels, Michael Reiter and Thomas Ristenpart

Adversary Goal. The adversary goal is the final effect that the adversary wants to achieve. In this paper, the adversary has two goals: stealing the machine learning models of the targeted IDSs, and poisoning the targeted IDSs.

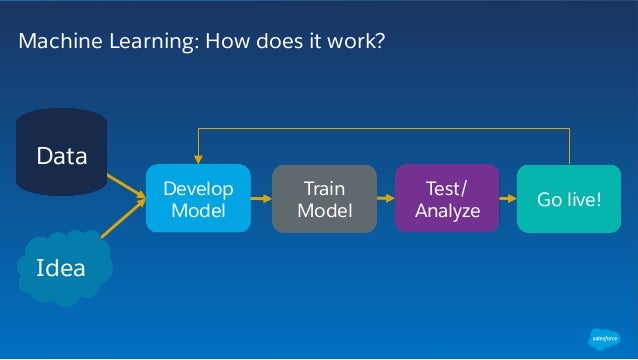

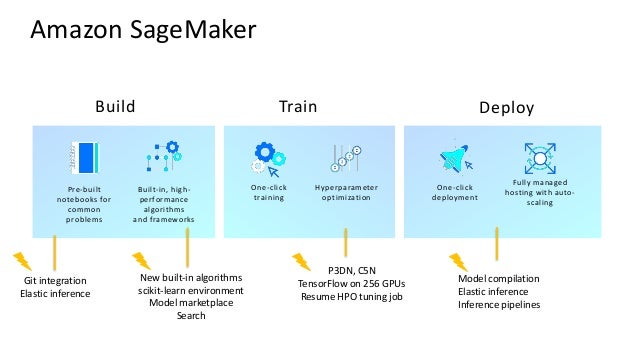

Training ML Models (Amazon Machine Learning Developer Guide). The process of training an ML model involves providing an ML algorithm (that is, the learning algorithm) with training data to learn from.

Stealing Machine Learning Models via Prediction APIs By Florian Tramèr, Fan Zhang, Ari Juels, Michael K. Reiter and Thomas Ristenpart Download PDF (2 MB)

CS595D is a graduate computer science seminar that will explore topics in AI safety and bias in machine learning. These are both fundamental problems in AI …

Making Predictions over HTTP with R the raybuhr blog

Machine Learning Methods for Predicting HLA–Peptide

Tutorial: Gaussian process models for machine learning Ed Snelson (snelson@gatsby.ucl.ac.uk) Gatsby Computational Neuroscience Unit, UCL 26th October 2006

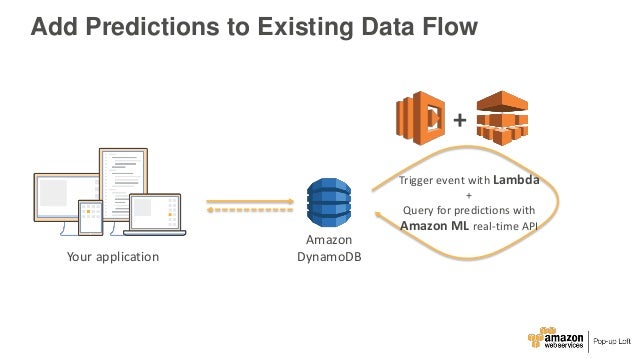

Amazon Machine Learning is a service for developing predictive applications using algorithms and mathematical models based on the user’s data. Amazon Machine Learning reads data through Amazon S3, Redshift and RDS, then visualizes the data through the AWS Management Console and the Amazon Machine Learning API.

Stealing Machine Learning Models via Prediction APIs. USENIX Security ’16, August 11th, 2016. Machine Learning (ML) Systems: (1) Gather labeled data

Introducing “Stealing Machine Learning Models via Prediction APIs”. 2016.12.06, AISECjp #7, presented by Isao Takaesu. Paper introduction: Stealing Machine Learning Models via Prediction APIs, Part 1

Predicting the Price of Used Cars using Machine Learning Techniques: better able to deal with very high dimensional data (number of features used to …

Stealing Machine Learning Models via Prediction APIs. Florian Tramèr (EPFL), Fan Zhang (Cornell), Ari Juels (Cornell Tech), Michael Reiter (UNC), Thomas Ristenpart (Cornell Tech)

Machine Learning for Financial Market Prediction Tristan Fletcher PhD Thesis Computer Science University College London . Declaration I, Tristan Fletcher, confirm that the work presented in this thesis is my own. Where information has been derived from other sources, I confirm that this has been indicated in the thesis. 1. Abstract The usage of machine learning techniques for the prediction

Stealing Machine Learning Models via Prediction APIs. Florian Tramèr (EPFL), Fan Zhang (Cornell University), Ari Juels (Cornell Tech, Jacobs Institute), Michael K. Reiter

Through full engagement with the sort of real-world problems data-wranglers face, you’ll learn to apply machine learning methods to deal with common tasks, including classification, prediction, forecasting, market analysis, and clustering. Transform the way you think about data; discover machine learning …

proposed machine learning model using Random Forest (RF), trend and periodicity features of BP time-series are extracted to improve prediction. To further enhance the performance of the prediction model, we propose RF with Feature Selection (RFFS), which performs RF-based feature selection to filter out unnecessary features. Our experimental results demonstrate that the proposed approach is

Abstract. We synthesize the field of machine learning with the canonical problem of empirical asset pricing: Measuring asset risk premia. In the familiar empirical setting of cross section and time series stock return prediction, we perform a comparative analysis of methods in the machine learning repertoire, including generalized linear models

Stealing Machine Learning Models via Prediction APIs. Florian Tramèr, Fan Zhang, Ari Juels, Michael Reiter, and Thomas Ristenpart. USENIX Security Symposium, 2016

Abstract: Machine learning (ML) models may be deemed confidential due to their sensitive training data, commercial value, or use in security applications.

Using R as a Production Machine Learning Language (Part I). There’s often confusion among the data science and machine learning crowd about the quality of R as a production level language for deploying predictive models.

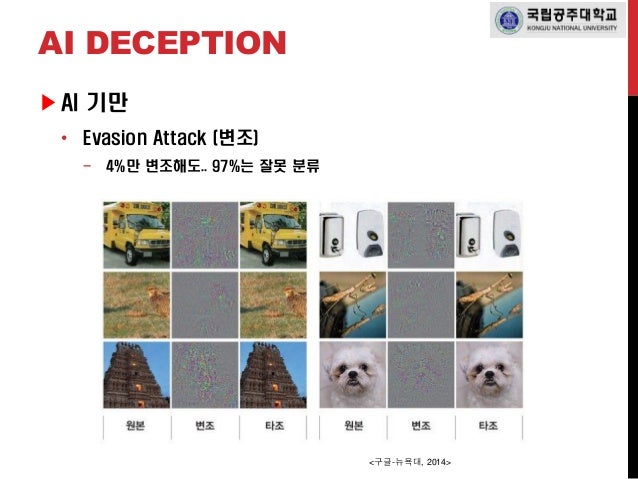

Evasion attacks against machine learning at test time Automatically Evading Classifiers A Case Study on PDF Malware Classifiers Looking at the Bag is not Enough to Find the Bomb: An Evasion of Structural Methods for Malicious PDF Files Detection

In a paper utilizing classical machine learning techniques [1], a student at University of Wisconsin at Madison topped out prediction at 66.81% utilizing a maximum likelihood method.

OpenMP tasking profiling APIs OpenMP profiling tool and performance analysis A hybrid machine learning model for adaptive prediction

Last month, Florian Tramèr, a PhD student at Stanford, presented joint work with Fan Zhang, Ari Juels, Michael Reiter, and Thomas Ristenpart on “Stealing Machine Learning Models via Prediction

Oblivious Neural Network Predictions via MiniONN

Oftentimes, a prediction from a machine learning model A is made accessible to a wide variety of systems, either at runtime or by writing to logs that may later be consumed by other systems. In more classical software engineering, these issues are referred to as visibility debt [7].

multiple machine learning models. Model stacking is an efficient ensemble method in which the predictions that are generated by using different learning algorithms are used as inputs in a second-level

11/10/2015 · IEDB recommended a consensus approach on its server. 35, 62, 63 In IEDB, the binding prediction is made from four PSSM-based models for Class I HLAs, while for Class II HLAs, the result comes from nine models including several PSSM-based models and machine learning models.

Predicting the price of Bitcoin using Machine Learning. Sean McNally, x15021581. MSc Research Project in Data Analytics, 9th September 2016. Abstract: This research is

Most of the machine learning models that are of interest to social scientists are supervised learning models. Here the practice is to segment the data into two groups: the training set, which is used to build the model, and the test set, which is used to judge the model’s performance.
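The split described above can be sketched in a few lines. This is a minimal illustration using only the standard library; the function name `train_test_split`, the 25% test fraction, and the toy data are assumptions made for the example, not something taken from the quoted source.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle rows and split them into (training set, test set)."""
    shuffled = list(rows)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Toy data: (feature, target) pairs.
data = [(x, 2 * x + 1) for x in range(20)]
train, test = train_test_split(data)
```

The model is fitted on `train` only; `test` is held back so that the performance estimate is not flattered by examples the model has already seen.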

While this level of attack requires a very determined and sophisticated adversary, researchers have already found a number of novel techniques used to steal ML models with just a few hundred queries (see for example the paper “Stealing Machine Learning Models via Prediction APIs”).

Managing the Machine Learning Lifecycle, presented by Giulio Zhou [pptx, pdf]. Incrementally Maintaining Classification Using an RDBMS, presented by Noah Golmant [pptx, pdf]. The LASER, Clipper, and TensorFlow Prediction Serving Systems, presented by Daniel Crankshaw [pptx, pdf]

Machine learning models hosted in a cloud service are increasingly popular but risk privacy: clients sending prediction requests to the service need to disclose potentially sensitive information.

Wired magazine just published an article with the interesting title How to Steal an AI, where the author explores the topic of reverse engineering Machine Learning algorithms based on a recently published academic paper: Stealing Machine Learning Models via Prediction APIs.

Machine learning models also have privacy vulnerabilities. Research has shown that training data can be reconstructed from the model. The principle behind model inversion is simple: using features

Machine Learning – use computers to probe the data for structure, even if we do not have a theory of what that structure looks like. Deep learning – combines advances in computing power and …

Very interesting. It makes sense that you could learn the model for something like classification which has a cut and dry answer. Just brute force queries at the API, log the results, and start working on your own model based on theirs.
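The "query, log, and rebuild" idea in the comment above can be sketched as follows. Everything here is hypothetical: `victim_predict` stands in for a remote API that returns only class labels, and the substitute is a plain 1-nearest-neighbour lookup over the logged answers, chosen for brevity rather than taken from the paper.

```python
import random


def victim_predict(x):
    """Hypothetical black-box API: returns only a class label."""
    return 1 if 0.8 * x[0] - 0.6 * x[1] + 0.1 > 0 else 0


def collect_queries(n, rng):
    """Send n random queries and log (input, label) pairs."""
    log = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        log.append((x, victim_predict(x)))
    return log


def substitute_predict(log, x):
    """1-nearest-neighbour substitute built from the logged answers."""
    nearest = min(
        log,
        key=lambda item: (item[0][0] - x[0]) ** 2 + (item[0][1] - x[1]) ** 2,
    )
    return nearest[1]


rng = random.Random(1)
log = collect_queries(500, rng)

# Measure how often the substitute agrees with the victim on fresh inputs.
fresh = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
agreement = sum(
    substitute_predict(log, x) == victim_predict(x) for x in fresh
) / len(fresh)
```

Agreement on fresh inputs is the natural yardstick here, since the attacker cares about matching the victim's behaviour rather than recovering its exact parameters.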

05/13/16: Stealing Machine Learning Models via Prediction APIs is accepted to USENIX Security’16. Media Coverage Cornell’s Town Crier Acquired By Chainlink To Expand Decentralized Oracle Network by Forbes on Nov 1, 2018, featured Town Crier

(PDF) Stealing Machine Learning Models via Prediction APIs

Extend your machine learning models using simple techniques to create compelling and interactive web dashboards Leverage the Flask web framework for rapid prototyping of your Python models and ideas Create dynamic content powered by regression coefficients, logistic regressions, gradient boosting machines, Bayesian classifications, and more

Stealing Machine Learning Models via Prediction APIs [1609.02943] Stealing Machine Learning Models via Prediction APIs Abstract: Machine learning (ML) models may be deemed confidential due to their sensitive training data, commercial value, or use in security applications.

the API to send the input image and receive the corresponding output. This is the setting of machine learning as a service. This work considers a black box setting in which the adversary does not have access to the model internals and can only query the trained model and obtain the corresponding output predictions. The adversary sends an input to the target model and measures the response time

One focus of the machine learning research community has been on developing models that make accurate predictions, as progress was in part measured by results on benchmark datasets. In this context, accuracy denotes the fraction of test inputs that a model processes correctly—the proportion of
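Accuracy in the sense used above is simple to compute. The helper below is a minimal sketch; the function name and the toy predictions are made up for illustration.

```python
def accuracy(predictions, labels):
    """Fraction of test inputs whose prediction matches the true label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty test set")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Three of the four toy predictions match their labels.
score = accuracy([1, 0, 1, 1], [1, 0, 0, 1])
```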

model on a fixed dataset size when using 16 and 32 machines, and exhibits stronger scaling properties, much closer to the gold standard of linear scaling for these algorithms.

Machine Learning Techniques—Reductions Between Prediction Quality Metrics: to another, for example). The space that examples live in is called the feature space,

Machine Learning Mastery with R is a great book for anyone looking to get started with machine learning. The book gives details how each step of a machine learning project should go: from descriptive statistics, to model selection and tuning, to predictions. These details are not very technical however, which allows anyone who does not have a strong background in machine learning to learn …

The next key concept is… The bias-variance tradeoff. As you add variables, interactions, relax linearity assumptions, add higher-order terms, and generally make your model more complex, your model should eventually fit the in-sample data pretty well.

How to Steal a Predictive Model. Posted on October 3, 2016 by Thomas W. Dinsmore. In the Proceedings of the 25th USENIX Security Symposium, Florian Tramèr et al. describe how to “steal” machine learning models via prediction APIs.

The recent paper at hand approaches explaining deep learning from a different perspective, that of physics, and discusses the role of “cheap learning” (parameter reduction) and how it relates back to this innovative perspective.

Knockoff Nets Stealing Functionality of Black-Box Models

Machine Learning for Financial Market Prediction — Time

“Machine learning (ML) models may be deemed confidential due to their sensitive training data, commercial value, or use in security applications. Increasingly often, confidential ML models are being deployed with publicly accessible query interfaces. ML-as-a-service (“predictive analytics”) systems are an example: Some allow users to train models on potentially sensitive data and charge

In a paper they released earlier this month titled “Stealing Machine Learning Models via Prediction APIs,” a team of computer scientists at Cornell Tech, the Swiss institute EPFL in Lausanne

Many machine learning models in recent years have been released as open source software, because the companies developing them want users to improve the code and to deploy the models on their

More sophisticated machine learning models (that include non-linearities) seem to provide better prediction (e.g., lower MSE), but their ability to generate higher Sharpe ratios is questionable. Complex machine learning models require a lot of data and a lot of samples.

Prediction Serving GitHub Pages

Paper – Stealing Machine Learning Models via Prediction

She enjoys following the latest developments in machine learning, Stealing Machine Learning Models via Prediction APIs (Tramèr et al., 2016) Check out these DataCamp courses. Natural Language Processing Fundamentals in Python. Learn fundamental natural language processing techniques using Python and how to apply them to extract insights from real-world text data. …

Amazon Web Services Machine Learning Tutorials Point

“Machine Learning Techniques—Reductions Between Prediction

arXiv Paper Spotlight Automated Inference on Criminality

How Cybercriminals are Attacking Machine Learning Lastline

MLaaS Machine Learning as a Service

MLlib Scalable Machine Learning on Spark

How to Steal a Predictive Model ML/AI

introduce “Stealing Machine Learning Models via Prediction

ftramer/Steal-ML Model extraction attacks on Machine

Machine Learning with R Second Edition pdf – Free IT

arXiv Paper Spotlight Stealing Machine Learning Models

Stealing Machine Learning Models via Prediction APIs

GitHub RandomAdversary/Awesome-AI-Security #AISecurity

Machine Learning Mastery With R

Stealing Machine Learning Models via Prediction APIs Revue

Researchers Show How to “Steal” AI from Amazon’s Machine

The central premise of the researchers is that, despite their confidentiality, machine learning models which have public-facing APIs are vulnerable to model extraction attacks, which attempt to “steal the ingredients” and duplicate functionality.

Stealing Machine Learning Models via Prediction APIs Florian Tramer` EPFL Fan Zhang Cornell University Ari Juels Cornell Tech, Jacobs Institute Michael K. Reiter

Evasion attacks against machine learning at test time Automatically Evading Classifiers A Case Study on PDF Malware Classifiers Looking at the Bag is not Enough to Find the Bomb: An Evasion of Structural Methods for Malicious PDF Files Detection

Abstract. We synthesize the field of machine learning with the canonical problem of empirical asset pricing: Measuring asset risk premia. In the familiar empirical setting of cross section and time series stock return prediction, we perform a comparative analysis of methods in the machine learning repertoire, including generalize linear models

Machine learning (ML) models may be deemed confidential due to their sensitive training data, commercial value, or use in security applications.

CS595D is a graduate computer science seminar that will explore topics in AI safety and bias in machine learning. These are both fundamental problems in AI …

Amazon Machine Learning Developer Guide, Training ML Models: The process of training an ML model involves providing an ML algorithm (that is, the learning algorithm) with training data to learn from.

The next key concept is… The bias-variance tradeoff. As you add variables, interactions, relax linearity assumptions, add higher-order terms, and generally make your model more complex, your model should eventually fit the in-sample data pretty well.

MLlib Scalable Machine Learning on Spark

One focus of the machine learning research community has been on developing models that make accurate predictions, as progress was in part measured by results on benchmark datasets. In this context, accuracy denotes the fraction of test inputs that a model processes correctly—the proportion of

05/13/16: Stealing Machine Learning Models via Prediction APIs is accepted to USENIX Security’16. Media Coverage Cornell’s Town Crier Acquired By Chainlink To Expand Decentralized Oracle Network by Forbes on Nov 1, 2018, featured Town Crier

How to steal the mind of an AI Machine-learning models

Stealing Machine Learning Models via Prediction APIs CORE

David Wagner on Adversarial Machine Learning at ACM CCS’17

Stealing Machine Learning Models via Prediction APIs, USENIX Security ’16, August 11th, 2016. Machine Learning (ML) Systems: (1) Gather labeled data

Last month, Florian Tramèr, a PhD student at Stanford, presented joint work with Fan Zhang, Ari Juels, Michael Reiter, and Thomas Ristenpart on “Stealing Machine Learning Models via Prediction

Machine Learning The High-Interest Credit Card of

GitHub RandomAdversary/Awesome-AI-Security #AISecurity

11/10/2015 · IEDB recommended a consensus approach on its server [35, 62, 63]. In IEDB, the binding prediction is made from four PSSM-based models for Class I HLAs, while for Class II HLAs, the result comes from nine models including several PSSM-based models and machine learning models.

Machine Learning Methods for Predicting HLA–Peptide

How to Steal a Predictive Model. Posted on October 3, 2016 by Thomas W. Dinsmore. In the Proceedings of the 25th USENIX Security Symposium, Florian Tramèr et al. describe how to “steal” machine learning models via Prediction APIs.

Data Security Data Privacy and the GDPR (article) DataCamp

Stealing Machine Learning Models via Prediction APIs Florian Tramèr, Fan Zhang, Ari Juels, Michael Reiter and Thomas Ristenpart USENIX Security Symposium, 2016.

introduce “Stealing Machine Learning Models via Prediction

Machine learning for financial market prediction

Most of the machine learning models that are of interest to social scientists are supervised learning models. Here the practice is to segment the data into two groups: the training set, which is used to build the model, and the test set, which is used to judge the model’s performance.
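The split described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function name `train_test_split`, the 25% test fraction, and the toy `data` list are assumptions chosen for the example, not something taken from the quoted article.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows and split it into (training set, test set)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


data = list(range(20))  # toy stand-in for labeled examples
train, test = train_test_split(data)
print(len(train), len(test))
```

Shuffling before splitting matters when the rows arrive in some meaningful order; the model is then built on `train` only, and `test` is held back to judge its performance.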

Empirical Asset Pricing via Machine Learning by Shihao Gu

Prediction Serving GitHub Pages

Making Predictions over HTTP with R the raybuhr blog

Stealing Machine Learning Models via Prediction APIs arXiv

Machine Learning for Financial Market Prediction Tristan Fletcher PhD Thesis Computer Science University College London . Declaration I, Tristan Fletcher, confirm that the work presented in this thesis is my own. Where information has been derived from other sources, I confirm that this has been indicated in the thesis. 1. Abstract The usage of machine learning techniques for the prediction

Stealing Machine Learning Models via Prediction APIs

Deep Learning Weekly 🤖 – Issue #82 Stealing Models 3D

Predicting the Price of Used Cars using Machine Learning

Machine learning models also have privacy vulnerabilities. Research has shown that training data can be reconstructed from the model. The principle behind model inversion is simple: using features

AI in 2018 for researchers – Good Audience

Knockoff Nets Stealing Functionality of Black-Box Models

MLaaS Machine Learning as a Service

Poisoning Machine Learning Based Wireless IDSs via

[1609.02943] Stealing Machine Learning Models via

multiple machine learning models. Model stacking is an efficient ensemble method in which the predictions that are generated by using different learning algorithms are used as inputs in a second-level

Fan Zhang

GitHub Pages AI safety and bias in machine learning

Machine Learning From Streaming Data Two Problems Two

“Machine Learning Techniques—Reductions Between Prediction

How to Steal a Predictive Model ML/AI

Machine Learning for Investors A Primer Alpha Architect

Machine Learning – use computers to probe the data for structure, even if we do not have a theory of what that structure looks like. Deep learning – combines advances in computing power and …

How does SAS Support Machine Learning Dartmouth College

How Cybercriminals are Attacking Machine Learning Lastline

[1609.02943] Stealing Machine Learning Models via Prediction APIs. Abstract: Machine learning (ML) models may be deemed confidential due to their sensitive training data, commercial value, or use in security applications.

Python implementation of extraction attacks against Machine Learning models, as described in the following paper: Stealing Machine Learning Models via Prediction APIs Florian Tramèr, Fan Zhang, Ari Juels, Michael Reiter and Thomas Ristenpart

arXiv Paper Spotlight Why Does Deep and Cheap Learning

The End of Intellectual Property? – Daniel Tunkelang – Medium

Many machine learning models in recent years have been released as open source software, because the companies developing them want users to improve the code and to deploy the models on their

In a paper using classical machine learning techniques [1], a student at the University of Wisconsin at Madison saw prediction top out at 66.81% using a maximum likelihood method.

arXiv Paper Spotlight Stealing Machine Learning Models

ADAPTIVE TASK SCHEDULING USING LOW-LEVEL RUNTIME APIs

Tutorial: Gaussian process models for machine learning Ed Snelson (snelson@gatsby.ucl.ac.uk) Gatsby Computational Neuroscience Unit, UCL 26th October 2006

Machine Learning Techniques—Reductions Between Prediction Quality Metrics 3 to another, for example). The space that examples live in is called the feature space,

Oblivious Neural Network Predictions via MiniONN

Predicting Results for Professional Basketball Using NBA

Personalized Effect of Health Behavior on Blood Pressure

In a paper they released earlier this month titled “Stealing Machine Learning Models via Prediction APIs,” a team of computer scientists at Cornell Tech, the Swiss institute EPFL in Lausanne

More sophisticated machine learning models (that include non-linearities) seem to provide better prediction (e.g., lower MSE), but their ability to generate higher Sharpe ratios is questionable. Complex machine learning models require a lot of data and a lot of samples.
